Copyright © 2023 The Apache Software Foundation

License and Disclaimer

The ASF licenses this documentation to you under the Apache License, Version 2.0 (the "License"); you may not use this documentation except in compliance with the License. You may obtain a copy of the License at

Unless required by applicable law or agreed to in writing, this documentation and its contents are distributed under the License on an "AS IS" BASIS, WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied. See the License for the specific language governing permissions and limitations under the License.

Trademarks

All terms mentioned in the text that are known to be trademarks or service marks have been appropriately capitalized. Use of such terms in this book should not be regarded as affecting the validity of the the trademark or service mark.

1. Apache UIMA Ruta Overview

1.1. What is Apache UIMA Ruta?

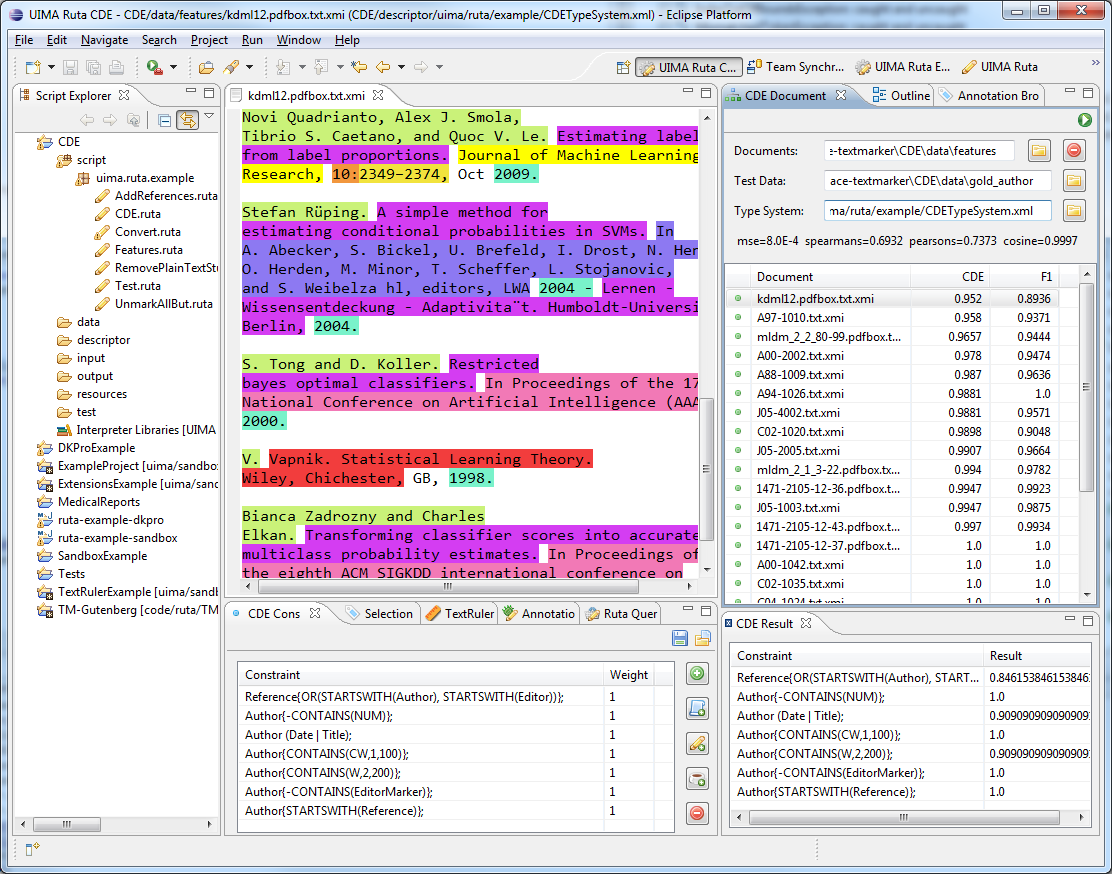

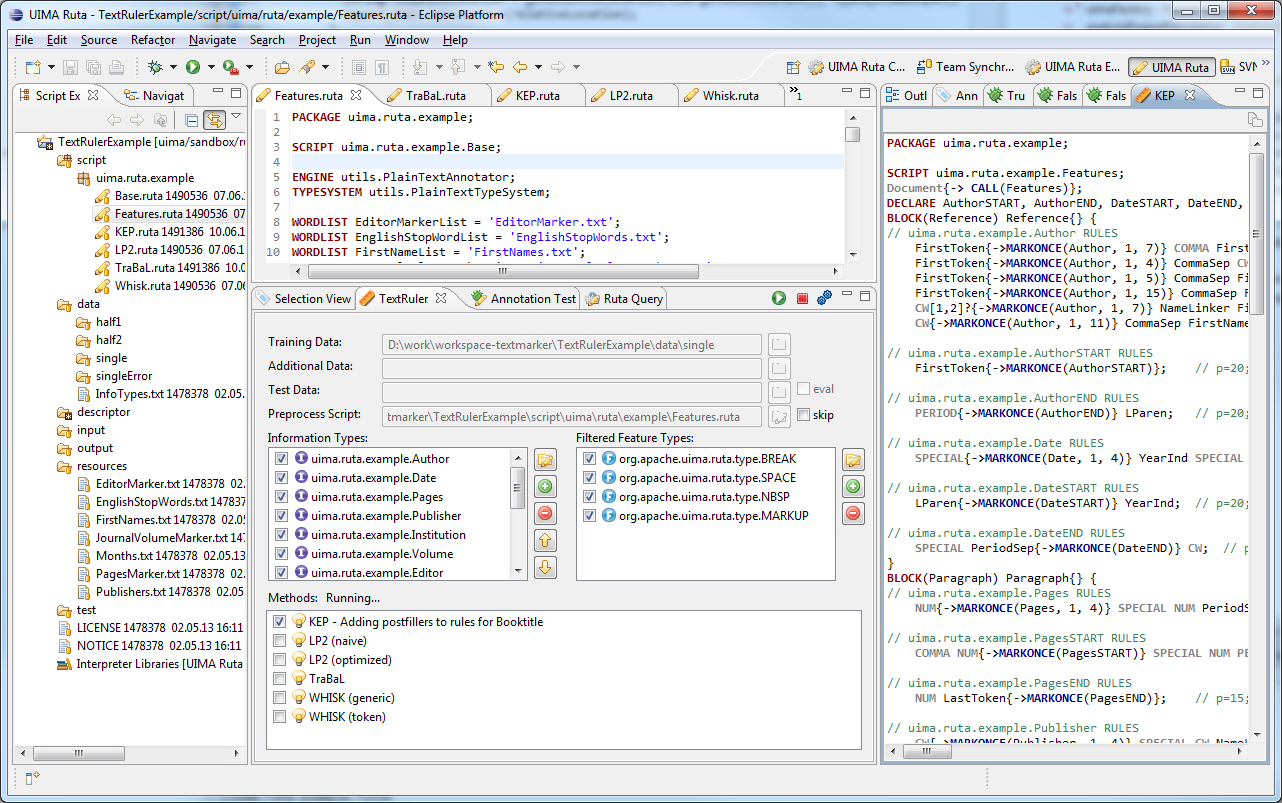

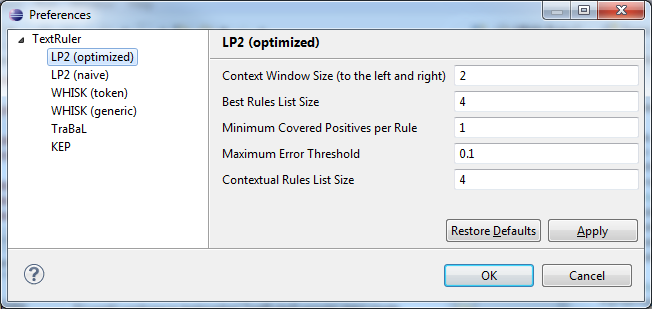

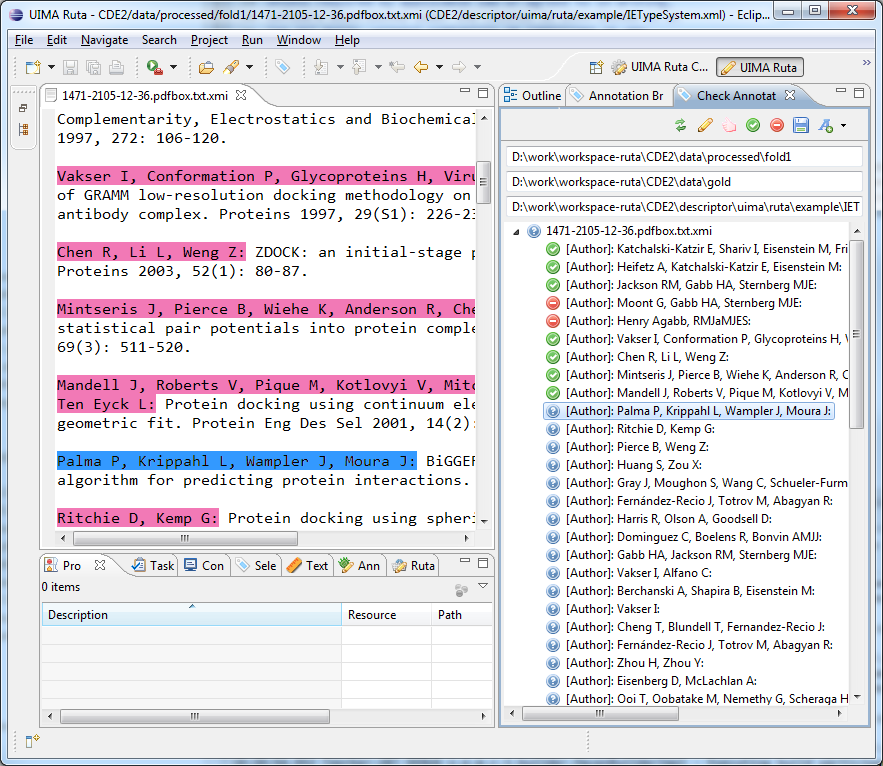

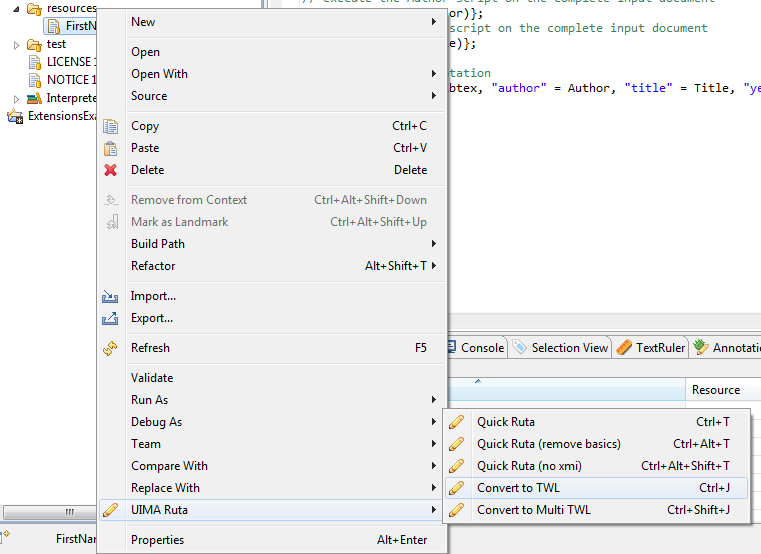

Apache UIMA Ruta™ is a rule-based script language supported by Eclipse-based tooling. The language is designed to enable rapid development of text processing applications within Apache UIMA™. A special focus lies on the intuitive and flexible domain specific language for defining patterns of annotations. Writing rules for information extraction or other text processing applications is a tedious process. The Eclipse-based tooling for UIMA Ruta, called the Apache UIMA Ruta Workbench, was created to support the user and to facilitate every step when writing UIMA Ruta rules. Both the Ruta rule language and the UIMA Ruta Workbench integrate smoothly with Apache UIMA.

1.2. Getting started

This section gives a short roadmap how to read the documentation and gives some recommendations how to start developing UIMA Ruta-based applications. This documentation assumes that the reader knows about the core concepts of Apache UIMA. Knowledge of the meaning and usage of the terms “CAS”, “Feature Structure”, “Annotation”, “Type”, “Type System” and “Analysis Engine” is required. Please refer to the documentation of Apache UIMA for an introduction.

Unexperienced users that want to learn about UIMA Ruta can start with the next two sections: Section 1.3 gives a short overview of the core ideas and features of the UIMA Ruta language and Workbench. This section introduces the main concepts of the UIMA Ruta language. It explains how UIMA Ruta rules are composed and applied, and discusses the advantages of the UIMA Ruta system. The following Section 1.4 approaches the UIMA Ruta language using a different perspective. Here, the language is introduced by examples. The first example starts with explaining how a simple rule looks like, and each following example extends the syntax or semantics of the UIMA Ruta language. After the consultation of these two sections, the reader is expected to have gained enough knowledge to start writing her first UIMA Ruta-based application.

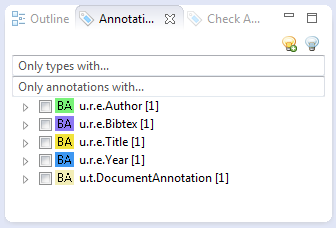

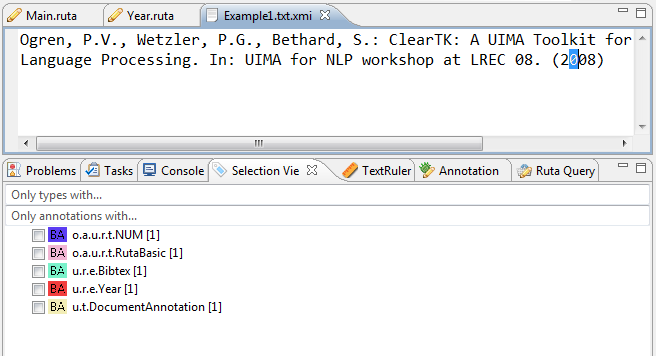

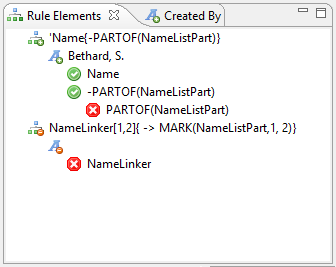

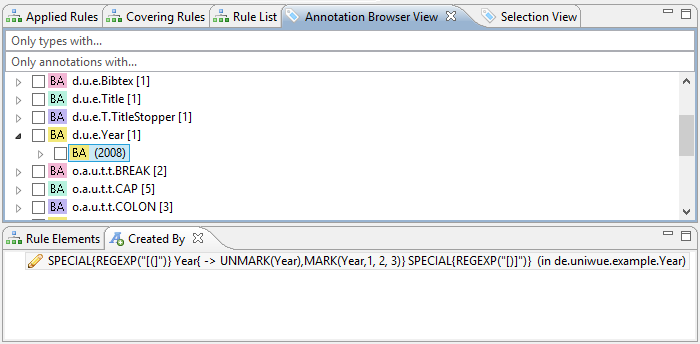

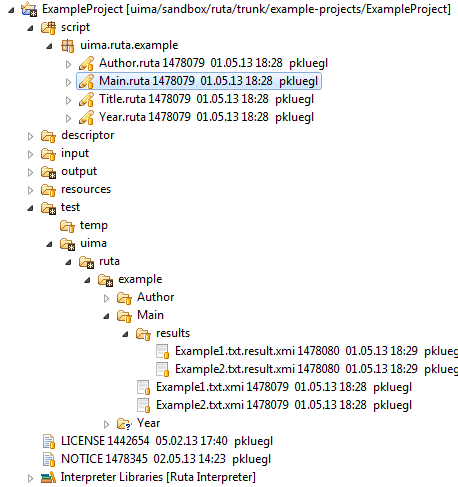

The UIMA Ruta Workbench was created to support the user and to facilitate the development process. It is strongly recommended to use this Eclipse-based IDE since it, for example, automatically configures the component descriptors and provides editing support like syntax checking. Section 3.1 describes how the UIMA Ruta Workbench is installed. UIMA Ruta rules can also be applied on CAS without using the UIMA Ruta Workbench. Section 4.1 contains examples how to execute UIMA Ruta rules in plain java. A good way to get started with UIMA Ruta is to play around with an exemplary UIMA Ruta project, e.g., “ExampleProject” in the example-projects of the UIMA Ruta source release. This UIMA Ruta project contains some simple rules for processing citation metadata.

1.3. Core Concepts

The UIMA Ruta language is an imperative rule language extended with scripting elements. A UIMA Ruta rule defines a pattern of annotations with additional conditions. If this pattern applies, then the actions of the rule are performed on the matched annotations. A rule is composed of a sequence of rule elements and a rule element essentially consist of four parts: A matching condition, an optional quantifier, a list of conditions and a list of actions. The matching condition is typically a type of an annotation by which the rule element matches on the covered text of one of those annotations. The quantifier specifies, whether it is necessary that the rule element successfully matches and how often the rule element may match. The list of conditions specifies additional constraints that the matched text or annotations need to fulfill. The list of actions defines the consequences of the rule and often creates new annotations or modifies existing annotations. They are only applied if all rule elements of the rule have successfully matched. Examples for UIMA Ruta rules can be found in Section 1.4.

When UIMA Ruta rules are applied on a document, respectively on a CAS, then they are always grouped in a script file. However, a UIMA Ruta script file does not only contain rules, but also other statements. First of all, each script file starts with a package declaration followed by a list of optional imports. Then, common statements like rules, type declarations or blocks build the body and functionality of a script. Section 4.1 gives an example, how UIMA Ruta scripts can be applied in plain Java. UIMA Ruta script files are naturally organized in UIMA Ruta projects, which is a concept of the UIMA Ruta Workbench. The structure of a UIMA Ruta project is described in Section 3.3

The inference of UIMA Ruta rules, that is the approach how the rules are applied, can be described as imperative depth-first matching. In contrast to similar rule-based systems, UIMA Ruta rules are applied in the order they are defined in the script. The imperative execution of the matching rules may have disadvantages, but also many advantages like an increased rate of development or an easier explanation. The second main property of the UIMA Ruta inference is the depth-first matching. When a rule matches on a pattern of annotations, then an alternative is always tracked until it has matched or failed before the next alternative is considered. The behavior of a rule may change, if it has already matched on an early alternative and thus has performed an action, which influences some constraints of the rule. Examples, how UIMA Ruta rules are applied, are given in Section 1.4.

The UIMA Ruta language provides the possibility to approach an annotation problem in different ways. Let us distinguish some approaches as an example. It is common in the UIMA Ruta language to create many annotations of different types. These annotations are probably not the targeted annotation of the domain, but can be helpful to incrementally approximate the annotation of interest. This enables the user to work “bottom-up” and “top-down”. In the former approach, the rules add incrementally more complex annotations using simple ones until the target annotation can be created. In the latter approach, the rules get more specific while partitioning the document in smaller segments, which result in the targeted annotation, eventually. By using many “helper”-annotations, the engineering task becomes easier and more comprehensive. The UIMA Ruta language provides distinctive language elements for different tasks. There are, for example, actions that are able to create new annotations, actions that are able to remove annotations and actions that are able to modify the offsets of annotations. This enables, amongst other things, a transformation-based approach. The user starts by creating general rules that are able to annotate most of the text fragments of interest. Then, instead of making these rules more complex by adding more conditions for situations where they fail, additional rules are defined that correct the mistakes of the general rules, e.g., by deleting false positive annotations. Section 1.4 provides some examples how UIMA Ruta rules can be engineered.

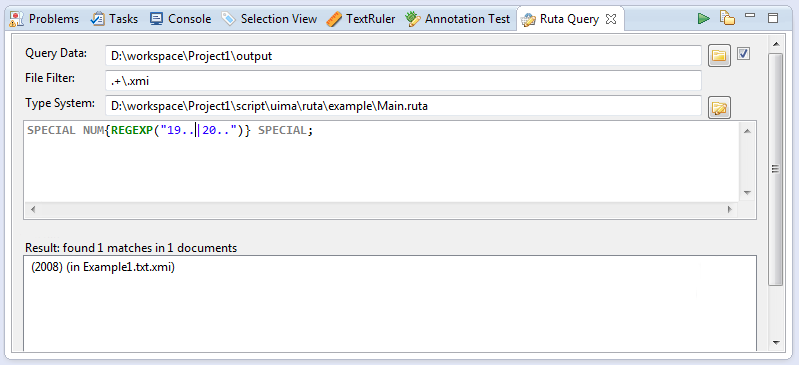

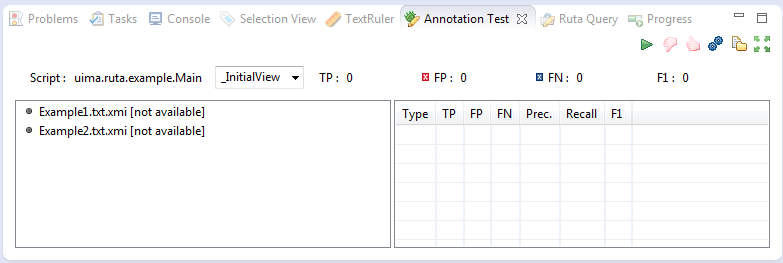

To write rules manually is a tedious and error-prone process. The UIMA Ruta Workbench was developed to facilitate writing rules by providing as much tooling support as possible. This includes, for example, syntax checking and auto completion, which make the development less error-prone. The user can annotate documents and use these documents as unit tests for test-driven development or quality maintenance. Sometimes, it is necessary to debug the rules because they do not match as expected. In this case, the explanation perspective provides views that explain every detail of the matching process. Finally, the UIMA Ruta language can also be used by the tooling, for example, by the “Query” view. Here, UIMA Ruta rules can be used as query statements in order to investigate annotated documents.

UIMA Ruta smoothly integrates with Apache UIMA. First of all, the UIMA Ruta rules are applied using a generic Analysis Engine and thus UIMA Ruta scripts can easily be added to Apache UIMA pipelines. UIMA Ruta also provides the functionality to import and use other UIMA components like Analysis Engines and Type Systems. UIMA Ruta rules can refer to every type defined in an imported type system, and the UIMA Ruta Workbench generates a type system descriptor file containing all types that were defined in a script file. Any Analysis Engine can be executed by rules as long as their implementation is available in the classpath. Therefore, functionality outsourced in an arbitrary Analysis Engine can be added and used within UIMA Ruta.

1.4. Learning by Example

This section gives an introduction to the UIMA Ruta language by explaining the rule syntax and inference with some simplified examples. It is recommended to use the UIMA Ruta Workbench to write UIMA Ruta rules in order to gain advantages like syntax checking. A short description how to install the UIMA Ruta Workbench is given here. The following examples make use of the annotations added by the default seeding of the UIMA Ruta Analysis Engine. Their meaning is explained along with the examples.

The first example consists of a declaration of a type followed by a simple rule. Type declarations always start with the keyword “DECLARE” followed by the short name of the new type. The namespace of the type is equal to the package declaration of the script file. If there is no package declaration, then the types declared in the script file have no namespace. There is also the possibility to create more complex types with features or specific parent types, but this will be neglected for now. In the example, a simple annotation type with the short name “Animal” is defined. After the declaration of the type, a rule with one rule element is given. UIMA Ruta rules in general can consist of a sequence of rule elements. Simple rule elements themselves consist of four parts: A matching condition, an optional quantifier, an optional list of conditions and an optional list of actions. The rule element in the following example has a matching condition “W”, an annotation type standing for normal words. Statements like declarations and rules always end with a semicolon.

DECLARE Animal;

W{REGEXP("dog") -> MARK(Animal)};The rule element also contains one condition and one action, both surrounded by curly parentheses. In order to distinguish conditions from actions they are separated by “→”. The condition “REGEXP("dog")” indicates that the matched word must match the regular expression “dog”. If the matching condition and the additional regular expression are fulfilled, then the action is executed, which creates a new annotation of the type “Animal” with the same offsets as the matched token. The default seeder does actually not add annotations of the type “W”, but annotations of the types “SW” and “CW” for small written words and capitalized words, which both have the parent type “W”.

There is also the possibility to add implicit actions and conditions, which have no explicit name, but consist only of an expression. In the part of the conditions, boolean expressions and feature match expression can be applied, and in the part of the actions, type expressions and feature assignment expression can be added. The following example contains one implicit condition and one implicit action. The additional condition is a boolean expression (boolean variable), which is set to “true”, and therefore is always fulfills the condition. The “MARK” action was replaced by a type expression, which refer to the type “Animal”. The following rule shows, therefore, the same behavior as the rule in the last example.

DECLARE Animal;

BOOLEAN active = true;

W{REGEXP("dog"), active -> Animal};There is also a special kind of rules, which follow a different syntax and semantic, and enables a simplified creation of annotations based on regular expression. The following rule, for example, creates an “Animal” annotation for each occurrence of “dog” or “cat”.

DECLARE Animal;

"dog|cat" -> Animal;Since it is tedious to create Animal annotations by matching on different regular expression, we apply an external dictionary in the next example. The first line defines a word list named “AnimalsList”, which is located in the resource folder (the file “Animals.txt” contains one animal name in each line). After the declaration of the type, a rule uses this word list to find all occurrences of animals in the complete document.

WORDLIST AnimalsList = 'Animals.txt';

DECLARE Animal;

Document{-> MARKFAST(Animal, AnimalsList)};The matching condition of the rule element refers to the complete document, or more specific to the annotation of the type “DocumentAnnotation”, which covers the whole document. The action “MARKFAST” of this rule element creates an annotation of the type “Animal” for each found entry of the dictionary “AnimalsList”.

The next example introduces rules with more than one rule element, whereby one of them is a composed rule element. The following rule tries to annotate occurrences of animals separated by commas, e.g., “dog, cat, bird”.

DECLARE AnimalEnum;

(Animal COMMA)+{-> MARK(AnimalEnum,1,2)} Animal;The rule consists of two rule elements, with “(Animal COMMA)+{→ MARK(AnimalEnum,1,2)}” being the first rule element and “Animal” the second one. Let us take a closer look at the first rule element. This rule element is actually composed of two normal rule elements, that are “Animal” and “COMMA”, and contains a greedy quantifier and one action. This rule element, therefore, matches on one Animal annotation and a following comma. This is repeated until one of the inner rule elements does not match anymore. Then, there has to be another Animal annotation afterwards, specified by the second rule element of the rule. In this case, the rule matches and its action is executed: The MARK action creates a new annotation of the type “AnimalEnum”. However, in contrast to the previous examples, this action also contains two numbers. These numbers refer to the rule elements that should be used to calculate the span of the created annotation. The numbers “1, 2” state that the new annotation should start with the first rule element, the composed one, and should end with the second rule element.

Let us make the composed rule element more complex. The following rule also matches on lists of animals, which are separated by semicolon. A disjunctive rule element is therefore added, indicated by the symbol “|”, which matches on annotations of the type “COMMA” or “SEMICOLON”.

(Animal (COMMA | SEMICOLON))+{-> MARK(AnimalEnum,1,2)} Animal;There two more special symbols that can be used to link rule elements.

If the symbol “|” is replaced by the symbol & in the last example, then the token after the animal need to be a comma and a semicolon, which is of course not possible.

Another symbol with a special meaning is “%”, which cannot only be used within a composed rule element (parentheses). This symbol can be interpreted as a global “and”: It links several rules, which only fire, if all rules have successfully matched.

In the following example, an annotation of the type “FoundIt” is created, if the document contains two periods in a row and two commas in a row:

PERIOD PERIOD % COMMA COMMA{-> FoundIt};There is a “wild card” (“#”) rule element, which can be used to skip some text or annotations until the next rule element is able to match.

DECLARE Sentence;

PERIOD #{-> MARK(Sentence)} PERIOD;This rule annotates everything between two “PERIOD” annotations with the type “Sentence”. Please note that the resulting annotations is automatically trimmed using the current filtering settings. Conditions at wild card rule elements should by avoided and only be used by advanced users.

Another special rule element is called “optional” (“_”). Sometimes, an annotation should be created on a text position if it is not followed by an annotation of a specific property. In contrast to normal rule elements with optional quantifier, the optional rule element does not need to match at all.

W ANY{-PARTOF(NUM)};

W _{-PARTOF(NUM)};The two rules in this example specify the same pattern: A word that is not followed by a number. The difference between the rules shows itself at the border of the matching window, e.g., at the end of the document. If the document contains only a single word, the first rule will not match successfully because the second rule element already fails at its matching condition. The second rule, however, will successfully match due to the optional rule element.

Rule elements can contain more then one condition. The rule in the next example tries to identify headlines, which are bold, underlined and end with a colon.

DECLARE Headline;

Paragraph{CONTAINS(Bold, 90, 100, true),

CONTAINS(Underlined, 90, 100, true), ENDSWITH(COLON)

-> MARK(Headline)};The matching condition of this rule element is given with the type “Paragraph”, thus the rule takes a look at all Paragraph annotations. The rule matches only if the three conditions, separated by commas, are fulfilled. The first condition “CONTAINS(Bold, 90, 100, true)” states that 90%-100% of the matched paragraph annotation should also be annotated with annotations of the type “Bold”. The boolean parameter “true” indicates that amount of Bold annotations should be calculated relatively to the matched annotation. The two numbers “90,100” are, therefore, interpreted as percent amounts. The exact calculation of the coverage is dependent on the tokenization of the document and is neglected for now. The second condition “CONTAINS(Underlined, 90, 100, true)” consequently states that the paragraph should also contain at least 90% of annotations of the type “underlined”. The third condition “ENDSWITH(COLON)” finally forces the Paragraph annotation to end with a colon. It is only fulfilled, if there is an annotation of the type “COLON”, which has an end offset equal to the end offset of the matched Paragraph annotation.

The readability and maintenance of rules does not increase, if more conditions are added. One of the strengths of the UIMA Ruta language is that it provides different approaches to solve an annotation task. The next two examples introduce actions for transformation-based rules.

Headline{-CONTAINS(W) -> UNMARK(Headline)};This rule consists of one condition and one action. The condition “-CONTAINS(W)” is negated (indicated by the character “-”), and is therefore only fulfilled, if there are no annotations of the type “W” within the bound of the matched Headline annotation. The action “UNMARK(Headline)” removes the matched Headline annotation. Put into simple words, headlines that contain no words at all are not headlines.

The next rule does not remove an annotation, but changes its offsets dependent on the context.

Headline{-> SHIFT(Headline, 1, 2)} COLON;Here, the action “SHIFT(Headline, 1, 2)” expands the matched Headline annotation to the next colon, if that Headline annotation is followed by a COLON annotation.

UIMA Ruta rules can contain arbitrary conditions and actions, which is illustrated by the next example.

DECLARE Month, Year, Date;

ANY{INLIST(MonthsList) -> MARK(Month), MARK(Date,1,3)}

PERIOD? NUM{REGEXP(".{2,4}") -> MARK(Year)};This rule consists of three rule elements. The first one matches on every token, which has a covered text that occurs in a word lists named “MonthsList”. The second rule element is optional and does not need to be fulfilled, which is indicated by the quantifier “?”. The last rule element matches on numbers that fulfill the regular expression “REGEXP(".{2,4}"” and are therefore at least two characters to a maximum of four characters long. If this rule successfully matches on a text passage, then its three actions are executed: An annotation of the type “Month” is created for the first rule element, an annotation of the type “Year” is created for the last rule element and an annotation of the type “Date” is created for the span of all three rule elements. If the word list contains the correct entries, then this rule matches on strings like “Dec. 2004”, “July 85” or “11.2008” and creates the corresponding annotations.

After introducing the composition of rule elements, the default matching strategy is examined. The two rules in the next example create an annotation for a sequence of arbitrary tokens with the only difference of one condition.

DECLARE Text1, Text2;

ANY+{ -> MARK(Text1)};

ANY+{-PARTOF(Text2) -> MARK(Text2)};The first rule matches on each occurrence of an arbitrary token and continues this until the end of the document is reached. This is caused by the greedy quantifier “+”. Note that this rule considers each occurrence of a token and is therefore executed for each token resulting many overlapping annotations. This behavior is illustrated with an example: When applied on the document “Peter works for Frank”, the rule creates four annotations with the covered texts “Peter works for Frank”, “works for Frank”, “for Frank” and “Frank”. The rule first tries to match on the token “Peter” and continues its matching. Then, it tries to match on the token “works” and continues its matching, and so on.

In this example, the second rule only returns one annotation, which covers the complete document. This is caused by the additional condition “-PARTOF(Text2)”. The PARTOF condition is fulfilled, if the matched annotation is located within an annotation of the given type, or put in simple words, if the matched annotation is part of an annotation of the type “Text2”. When applied on the document “Peter works for Frank”, the rule matches on the first token “Peter”, continues its match and creates an annotation of the type “Text2” for the complete document. Then it tries to match on the second token “works”, but fails, because this token is already part of an Text2 annotation.

UIMA Ruta rules can not only be used to create or modify annotations, but also to create features for annotations. The next example defines and assigns a relation of employment, by storing the given annotations as feature values.

DECLARE Annotation EmplRelation

(Employee employeeRef, Employer employerRef);

Sentence{CONTAINS(EmploymentIndicator) -> CREATE(EmplRelation,

"employeeRef" = Employee, "employerRef" = Employer)};The first statement of this example is a declaration that defines a new type of annotation named “EmplRelation”. This annotation has two features: One feature with the name “employeeRef” of the type “Employee” and one feature with the name “employerRef” of the type “Employer”. If the parent type is Annotation, then it can be omitted resulting in the following declaration:

DECLARE EmplRelation (Employee employeeRef, Employer employerRef);The second statement of the example, which is a simple rule, creates one annotation of the type “EmplRelation” for each Sentence annotation that contains at least one annotation of the type “EmploymentIndicator”. Additionally to creating an annotation, the CREATE action also assigns an annotation of the “Employee”, which needs to be located within the span of the matched sentence, to the feature “employeeRef” and an Employer annotation to the feature “employerRef”. The annotations mentioned in this example need to be present in advance.

In order to refer to annotations and, for example, assigning them to some features, special kinds of local and global variables can be utilized. Local variables for annotations do not need to be defined by are specified by a label at a rule element. This label can be utilized for referring to the matched annotation of this rule element within the current rule match alone. The following example illustrate some simple use cases using local variables:

DECLARE Annotation EmplRelation

(Employee employeeRef, Employer employerRef);

e1:Employer # EmploymentIndicator # e2:Employee)

{-> EmplRelation, EmplRelation.employeeRef=e2,

EmplRelation.employerRef=e1};Global variables for annotations are declared like other variables and are able to store annotations across rules as illustrated by the next example:

DECLARE MentionedAfter(Annotation first);

ANNOTATION firstPerson;

# p:Person{-> firstPerson = p};

Entity{-> MentionedAfter, MentionedAfter.first = firstPerson};The first line declares a new type that are utilized afterwards.

The second line defines a variable named firstPerson which can store one annotation.

A variable able to hold several annotations is defined with ANNOTATIONLIST.

The next line assigns the first occurrence of Person annotation to the annotation variable firstPerson.

The last line creates an annotation of the type MentionedAfter and assigns the value of the variable firstPerson to the feature first of the created annotation.

Expressions for annotations can be extended by a feature match and also conditions. This does also apply for type expressions that represent annotations. This functionality is illustrated with a simple example:

Sentence{-> CREATE(EmplRelation, "employeeRef" =

Employee.ct=="Peter"{ENDSWITH(Sentence)})};Here, an annotation of the type EmplRelation is created for each sentence.

The feature employeeRef is filled with one Employee annotation.

This annotation is specified by its type Employee.

The first annotation of this type within the matched sentence, which covers the text “Peter” and also ends with a Sentence annotation, is selected.

Sometimes, an annotation which was just created by an action should be assigned to a feature. This can be achieved by referring to the annotation given its type like it was shown in the first example with “EmplRelation”. However, this can cause problems in situations, e.g. where several annotation of a type are present at a specific span. Local variables using labels can also be used directly at actions, which create or modify actions. The action will assign the new annotation the the label variable, which can then be utilized by following actions as shown in the following example:

W.ct=="Peter"{-> e:Employee, CREATE(EmplRelation, "employeeRef" = e)};In the last examples, the values of features were defined as annotation types. However, also primitive types can be used, as will be shown in the next example, together with a short introduction of variables.

DECLARE Annotation MoneyAmount(STRING currency, INT amount);

INT moneyAmount;

STRING moneyCurrency;

NUM{PARSE(moneyAmount)} SPECIAL{REGEXP("€") -> MATCHEDTEXT(moneyCurrency),

CREATE(MoneyAmount, 1, 2, "amount" = moneyAmount,

"currency" = moneyCurrency)};First, a new annotation with the name “MoneyAmount” and two features are defined, one string feature and one integer feature. Then, two UIMA Ruta variables are declared, one integer variable and one string variable. The rule matches on a number, whose value is stored in the variable “moneyAmount”, followed by a special token that needs to be equal to the string “€”. Then, the covered text of the special annotation is stored in the string variable “moneyCurrency” and annotation of the type “MoneyAmount” spanning over both rule elements is created. Additionally, the variables are assigned as feature values.

Using feature expression for conditions and action, can reduce the complexity of a rule. The first rule in the following example set the value of the feature “currency” of the annotation of the type “MoneyAmount” to “Euro”, if it was “€” before. The second rule creates an annotation of the type “LessThan” for all annotations of the type “MoneyAmount”, if their amount is less than 100 and the currency is “Euro”.

DECLARE LessThan;

MoneyAmount.currency=="€"{-> MoneyAmount.currency="Euro"};

MoneyAmount{(MoneyAmount.amount<=100),

MoneyAmount.currency=="Euro" -> LessThan};UIMA Ruta script files with many rules can quickly confuse the reader. The UIMA Ruta language, therefore, allows to import other script files in order to increase the modularity of a project or to create rule libraries. The next example imports the rules together with all known types of another script file and executes that script file.

SCRIPT uima.ruta.example.SecondaryScript;

Document{-> CALL(SecondaryScript)};The script file with the name “SecondaryScript.ruta”, which is located in the package “uima/ruta/example”, is imported and executed by the CALL action on the complete document. The script needs to be located in the folder specified by the parameter scriptPaths, or in a corresponding package in the classpath. It is also possible to import script files of other UIMA Ruta projects, e.g., by adapting the configuration parameters of the UIMA Ruta Analysis Engine or by setting a project reference in the project properties of a UIMA Ruta project.

For simple rules that match on the complete document and only specify actions, a simplified syntax exists that omits the matching parts:

SCRIPT uima.ruta.example.SecondaryScript;

CALL(SecondaryScript);The types of important annotations of the application are often defined in a separate type system. The next example shows how to import those types.

TYPESYSTEM my.package.NamedEntityTypeSystem;

Person{PARTOF(Organization) -> UNMARK(Person)};The type system descriptor file with the name “NamedEntityTypeSystem.xml” located in the package “my/package” is imported. The descriptor needs to be located in a folder specified by the parameter descriptorPaths.

It is sometimes easier to express functionality with control structures known by programming languages rather than to engineer all functionality only with matching rules. The UIMA Ruta language provides the BLOCK element for some of these use cases. The UIMA Ruta BLOCK element starts with the keyword “BLOCK” followed by its name in parentheses. The name of a block has two purposes: On the one hand, it is easier to distinguish the block, if they have different names, e.g., in the explain perspective of the UIMA Ruta Workbench. On the other hand, the name can be used to execute this block using the CALL action. Hereby, it is possible to access only specific sets of rules of other script files, or to implement a recursive call of rules. After the name of the block, a single rule element is given, which has curly parentheses, even if no conditions or actions are specified. Then, the body of the block is framed by curly brackets.

BLOCK(English) Document{FEATURE("language", "en")} {

// rules for english documents

}

BLOCK(German) Document{FEATURE("language", "de")} {

// rules for german documents

}This example contains two simple BLOCK statements. The rules defined within the block are only executed, if the condition in the head of the block is fulfilled. The rules of the first block are only considered if the feature “language” of the document annotation has the value “en”. Following this, the rules of the second block are only considered for German documents.

The rule element of the block definition can also refer to other annotation types than “Document”. While the last example implemented something similar to an if-statement, the next example provides a show case for something similar to a for-each-statement.

DECLARE SentenceWithNoLeadingNP;

BLOCK(ForEach) Sentence{} {

Document{-STARTSWITH(NP) -> MARK(SentenceWithNoLeadingNP)};

}Here, the rule in the block statement is performed for each occurrence of an annotation of the type “Sentence”. The rule within the block matches on the complete document, which is the current sentence in the context of the block statement. As a consequence, this example creates an annotation of the type “SentenceWithNoLeadingNP” for each sentence that does not start with a NP annotation.

There are two more language constructs (-> and <-) that allow to apply rules within a certain context.

These rules are added to an arbitrary rule element and are called inlined rules.

The first example interprets the inlined rules as actions.

They are executed if the surrounding rule was able to match, which makes this one very similar to the block statement.

DECLARE SentenceWithNoLeadingNP;

Sentence{}->{

Document{-STARTSWITH(NP) -> SentenceWithNoLeadingNP};

};The second one (<-`) interprets the inlined rules as conditions. The surrounding rule can only match if at least one inlined rule was successfully applied. In the following example, a sentence is annotated with the type SentenceWithNPNP, if there are two successive NP annotations within this sentence.

DECLARE SentenceWithNPNP;

Sentence{-> SentenceWithNPNP}<-{

NP NP;

};A rule element may be extended with several inlined rule block as condition or action. If there a more than one inlined rule blocks as condition, each needs to contain at least one rule that was successfully applied. In the following example, the rule will one match if the sentence contains a number followed by a another number and a period followed by a comma, independently from their location within the sentence:

Sentence<-{NUM NUM;}<-{PERIOD COMMA;};Let us take a closer look on what exactly the UIMA Ruta rules match. The following rule matches on a word followed by another word:

W W;To be more precise, this rule matches on all documents like Apache UIMA, ApacheUIMA, Apache <b>UIMA</b>`.

There are two main reasons for this: First of all, it depends on how the available annotations are defined.

The default seeder for the initial annotations creates an annotation for all characters until an upper case character occurs.

Thus, the string “ApacheUIMA” consists of two tokens.

However, more important, the UIMA Ruta language provides a concept of visibility of the annotations.

By default, all annotations of the types “SPACE”, “NBSP”, “BREAK” and “MARKUP” (whitespace and XML elements) are filtered and not visible.

This holds of course for their covered text, too.

The rule elements skip all positions of the document where those annotations occur.

The rule in the last example matches on all examples.

Without the default filtering settings, with all annotations set to visible, the rule matches only on the document “ApacheUIMA” since it is the only one that contains two word annotations without any whitespace between them.

The filtering setting can also be modified by the UIMA Ruta rules themselves. The next example provides rules that extend and limit the amount of visible text of the document.

Sentence;

Document{-> RETAINTYPE(SPACE)};

Sentence;

Document{-> FILTERTYPE(CW)};

Sentence;

Document{-> RETAINTYPE, FILTERTYPE};The first rule matches on sentences, which do not start with any filtered type. Sentences that start with whitespace or markup, for example, are not considered. The next rule retains all text that is covered by annotations of the type “SPACE” meaning that the rule elements are now sensible to whitespaces. The following rule will, therefore, match on sentences that start with whitespaces. The third rule now filters the type “CW” with the consequence that all capitalized words are invisible. If the following rule now wants to match on sentences, then this is only possible for Sentence annotations that do not start with a capitalized word. The last rule finally resets the filtering setting to the default configuration in the UIMA Ruta Analysis Engine.

The next example gives a showcase for importing external Analysis Engines and for modifying the documents by creating a new view called “modified”. Additional Analysis Engines can be imported with the keyword “ENGINE” followed by the name of the descriptor. These imported Analysis Engines can be executed with the actions “CALL” or “EXEC”. If the executed Analysis Engine adds, removes or modifies annotations, then their types need to be mentioned when calling the descriptor, or else these annotations will not be correctly processed by the following UIMA Ruta rules.

ENGINE utils.Modifier;

Date{-> DEL};

MoneyAmount{-> REPLACE("<MoneyAmount/>")};

Document{-> COLOR(Headline, "green")};

Document{-> EXEC(Modifier)};In this example, we first import an Analysis Engine defined by the descriptor “Modifier.xml” located in the folder “utils”.

The descriptor needs to be located in the folder specified by the parameter descriptorPaths.

The first rule deletes all text covered by annotations of the type “Date”.

The second rule replaces the text of all annotations of the type “MoneyAmount” with the string <MoneyAmount/>.

The third rule remembers to set the background color of text in Headline annotation to green.

The last rule finally performs all of these changes in an additional view called “modified”, which is specified in the configuration parameters of the analysis engine. Section 1.5.4 and Section 2.17 provide a more detailed description.

In the last example, a descriptor file was loaded in order to import and apply an external analysis engine. Analysis engines can also be loaded using uimaFIT, whereas the given class name has to be present in the classpath. In the UIMA Ruta Workbench, you can add a dependency to a java project, which contains the implementation, to the UIMA Ruta project. The following example loads an analysis engine without an descriptor and applies it on the document. The additional list of types states that the annotations of those types created by the analysis engine should be available to the following Ruta rules.

UIMAFIT my.package.impl.MyAnalysisEngine;

Document{-> EXEC(MyAnalysisEngine, {MyType1, MyType2})};1.5. UIMA Analysis Engines

This section gives an overview of the UIMA Analysis Engines shipped with UIMA Ruta. The most important one is “RutaEngine”, a generic analysis engine, which is able to interpret and execute script files. The other analysis engines provide support for some additional functionality or add certain types of annotations.

1.5.1. Ruta Engine

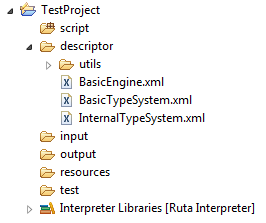

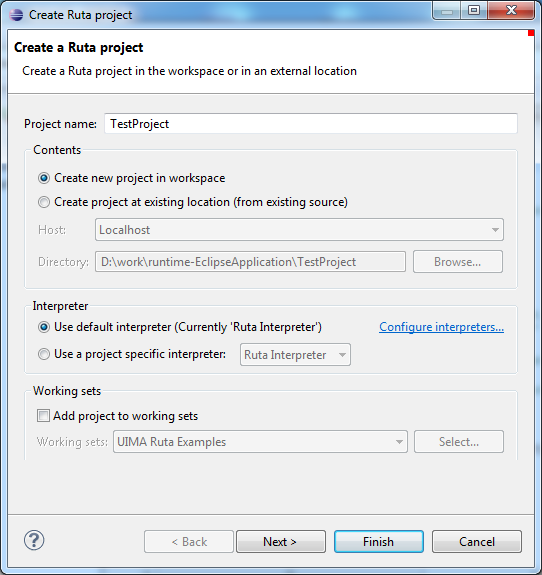

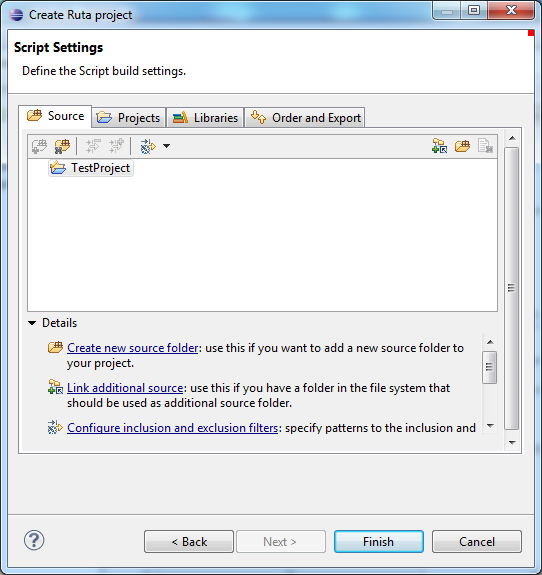

This generic Analysis Engine is the most important one for the UIMA Ruta language since it is responsible for applying the UIMA Ruta rules on a CAS. Its functionality is configured by the configuration parameters, which, for example, specify the rule file that should be executed. In the UIMA Ruta Workbench, a basic template named “BasicEngine.xml” is given in the descriptor folder of a UIMA Ruta project and correctly configured descriptors typically named “MyScriptEngine.xml” are generated in the descriptor folder corresponding to the package namespace of the script file. The available configuration parameters of the UIMA Ruta Analysis Engine are described in the following.

Configuration Parameters

The configuration parameters of the UIMA Ruta Analysis Engine can be subdivided into three different groups: parameters for the setup of the environment (mainScript to additionalExtensions), parameters that change the behavior of the analysis engine (reloadScript to simpleGreedyForComposed) and parameters for creating additional information how the rules were executed (debug to createdBy). First, a short overview of the configuration parameters is given in Table 1. Afterwards, all parameters are described in detail with examples.

To change the value of any configuration parameter within a UIMA Ruta script, the CONFIGURE action (see Section 2.8.8) can be used. For changing behavior of dynamicAnchoring the DYNAMICANCHORING action (see Section 2.8.11) is recommended.

| Name | Short description | Type |

|---|---|---|

Name with complete namespace of the script which will be interpreted and executed by the analysis engine. |

Single String |

|

Script (list of rules) to be applied. |

Single String |

|

This parameter specifies the name of the non-existing script if the parameter 'rules' is used. |

Single String |

|

Encoding of all UIMA Ruta script files. |

Single String |

|

List of absolute locations, which contain the necessary script files like the main script. |

Multi String |

|

List of absolute locations, which contain the necessary descriptor files like type systems. |

Multi String |

|

List of absolute locations, which contain the necessary resource files like word lists. |

Multi String |

|

Optional list of names with complete namespace of additional scripts, which can be referred to. |

Multi String |

|

Optional list of names with complete namespace of additional analysis engines, which can be called by UIMA Ruta rules. |

Multi String |

|

Optional list of class names with complete namespace of additional uimaFIT analysis engines, which can be called by UIMA Ruta rules. |

Multi String |

|

List of factory classes for additional extensions of the UIMA Ruta language like proprietary conditions. |

Multi String |

|

Option to initialize the rule script each time the analysis engine processes a CAS. |

Single Boolean |

|

List of class names that provide additional annotations before the rules are executed. |

Multi String |

|

List of complete type names of annotations that are invisible by default. |

Multi String |

|

Option to remove all inference annotations after execution of the rule script. |

Single Boolean |

|

Option to select annotation types that should be indexed internally in ruta. |

Multi String |

|

Option to skip annotation types in the internal indexing. |

Multi String |

|

Option to index only mentioned types internally in ruta. |

Single Boolean |

|

Option to index types additionally to the mentioned ones internally in ruta. |

Multi String |

|

Option to select annotation types that should be reindexed internally in ruta. |

Multi String |

|

Option to skip annotation types in the internal reindexing. |

Multi String |

|

Option to reindex only mentioned types internally in ruta. |

Single Boolean |

|

Option to reindex types additionally to the mentioned ones internally in ruta. |

Multi String |

|

Mode how internal indexing should be applied. |

Single String |

|

Option to validate the internal indexing. |

Single String |

|

Option to define empty text positions as invisible. |

Single Boolean |

|

Option to extend the datapath by the descriptorPaths |

Single Boolean |

|

Option to restrict short type names resolution to those in the declared typesystems. |

Single Boolean |

|

Option to ignore types even if they are available in the typesystem/CAS. |

Single String |

|

Option to allow rule matches to start at any rule element. |

Single Boolean |

|

Option to decrease the memory consumption when processing a large CAS. |

Single Boolean |

|

Option to activate a different inferencer for composed rule elements. |

Single Boolean |

|

Option to add debug information to the CAS. |

Single Boolean |

|

Option to add information about the rule matches to the CAS. |

Single Boolean |

|

Option to add all debug information to the indexes. |

Single Boolean |

|

List of rule ids. If provided, then debug information is only created for those rules. |

Multi String |

|

Option to add profile information to the CAS. |

Single Boolean |

|

Option to add statistics of conditions and actions to the CAS. |

Single Boolean |

|

Option to add additional information, which rule created an annotation. |

Single Boolean |

|

String array with names of variables. Is used in combination with varValues. |

Multi String |

|

String array with values of variables. Is used in combination with varNames. |

Multi String |

|

Remove whitespaces when loading dictionaries. |

Single Boolean |

|

String/token to be used to split columns in CSV tables. |

Single String |

|

List of factory classes for additional inference visitors. |

Multi String |

|

Maximum amount of allowed matches of a single rule. |

Single Integer |

|

Maximum amount of allowed matches of a single rule element. |

Single Integer |

mainScript

This parameter specifies the rule file that will be executed by the analysis engine and is, therefore, one of the most important ones. The exact name of the script is given by the complete namespace of the file, which corresponds to its location relative to the given parameter scriptPaths. The single names of packages (or folders) are separated by periods. An exemplary value for this parameter could be "org.apache.uima.Main", whereas "Main" specifies the file containing the rules and "org.apache.uima" its package. In this case, the analysis engine loads the script file "Main.ruta", which is located in the folder structure "org/apache/uima/". This parameter has no default value and has to be provided, although it is not specified as mandatory.

rules

A String parameter representing the rule that should be applied by the analysis engine. If set, it replaces the content of file specified by the mainScript parameter.

rulesScriptName

This parameter specifies the name of the non-existing script if the rules parameter is used. The default value is 'Anonymous'.

scriptEncoding

This parameter specifies the encoding of the rule files. Its default value is "UTF-8".

scriptPaths

The parameter scriptPaths refers to a list of String values, which specify the possible locations of script files. The given locations are absolute paths. A typical value for this parameter is, for example, "C:/Ruta/MyProject/script/". If the parameter mainScript is set to org.apache.uima.Main, then the absolute path of the script file has to be "C:/Ruta/MyProject/script/org/apache/uima/Main.ruta". This parameter can contain multiple values, as the main script can refer to multiple projects similar to a class path in Java.

descriptorPaths

This parameter specifies the possible locations for descriptors like analysis engines or type systems, similar to the parameter scriptPaths for the script files. A typical value for this parameter is for example "C:/Ruta/MyProject/descriptor/". The relative values of the parameter additionalEngines are resolved to these absolute locations. This parameter can contain multiple values, as the main script can refer to multiple projects similar to a class path in Java.

resourcePaths

This parameter specifies the possible locations of additional resources like word lists or CSV tables. The string values have to contain absolute locations, for example, "C:/Ruta/MyProject/resources/".

additionalScripts

The optional parameter additionalScripts is defined as a list of string values and contains script files, which are additionally loaded by the analysis engine. These script files are specified by their complete namespace, exactly like the value of the parameter mainScript and can be refered to by language elements, e.g., by executing the containing rules. An exemplary value of this parameter is "org.apache.uima.SecondaryScript". In this example, the main script could import this script file by the declaration "SCRIPT org.apache.uima.SecondaryScript;" and then could execute it with the rule "Document{→ CALL(SecondaryScript)};". This optional list can be used as a replacement of global imports in the script file.

additionalEngines

This optional parameter contains a list of additional analysis engines, which can be executed by the UIMA Ruta rules. The single values are given by the name of the analysis engine with their complete namespace and have to be located relative to one value of the parameter descriptorPaths, the location where the analysis engine searches for the descriptor file. An example for one value of the parameter is "utils.HtmlAnnotator", which points to the descriptor "HtmlAnnotator.xml" in the folder "utils". This optional list can be used as a replacement of global imports in the script file.

additionalUimafitEngines

This optional parameter contains a list of additional analysis engines, which can be executed by the UIMA Ruta rules. The single values are given by the name of the implementation with the complete namespace and have to be present int he classpath of the application. An example for one value of the parameter is "org.apache.uima.ruta.engine.HtmlAnnotator", which points to the "HtmlAnnotator" class. This optional list can be used as a replacement of global imports in the script file.

additionalExtensions

This parameter specifies optional extensions of the UIMA Ruta language. The elements of the string list have to implement the interface "org.apache.uima.ruta.extensions.IRutaExtension". With these extensions, application-specific conditions and actions can be added to the set of provided ones.

reloadScript

This boolean parameter indicates whether the script or resource files should be reloaded when processing a CAS. The default value is set to false. In this case, the script files are loaded when the analysis engine is initialized. If script files or resource files are extended, e.g., a dictionary is filled yet when a collection of documents are processed, then the parameter is needed to be set to true in order to include the changes.

seeders

This list of string values refers to implementations of the interface "org.apache.uima.ruta.seed.RutaAnnotationSeeder", which can be used to automatically add annotations to the CAS. The default value of the parameter is a single seeder, namely "org.apache.uima.ruta.seed.TextSeeder" that adds annotations for token classes like CW, NUM and SEMICOLON, but not MARKUP. Remember that additional annotations can also be added with an additional engine that is executed by a UIMA Ruta rule.

defaultFilteredTypes

This parameter specifies a list of types, which are filtered by default when executing a script file. Using the default values of this parameter, whitespaces, line breaks and markup elements are not visible to Ruta rules. The visibility of annotations and, therefore, the covered text can be changed using the actions FILTERTYPE and RETAINTYPE.

removeBasics

This parameter specifies whether the inference annotations created by the analysis engine should be removed after processing the CAS. The default value is set to false.

indexOnly

This parameter specifies the annotation types which should be indexed for ruta’s internal annotations. All annotation types that are relevant need to be listed here. The value of this parameter needs only be adapted for performance and memory optimization in pipelines that contains several ruta analysis engines. Default value is uima.tcas.Annotation

indexSkipTypes

This parameter specifies annotation types that should not be indexed at all. These types normally include annotations that provide no meaningful semantics for text processing, e.g., types concerning ruta debug information.

indexOnlyMentionedTypes

If this parameter is activated, then only annotations of types are internally indexed that are mentioned with in the rules. This optimization of the internal indexing can improve the speed and reduce the memory footprint. However, several features of the rule matching require the indexing of types that are not mentioned in the rules, e.g., literal rule matches, wildcards and actions like MARKFAST, MARKTABLE, TRIE. Default value is false.

indexAdditionally

This parameter specifies annotation types that should be index additionally to types mentioned in the rules. This parameter is only used if the parameter 'indexOnlyMentionedTypes' is activated.

reindexOnly

This parameter specifies the annotation types which should be reindexed for ruta’s internal annotations All annotation types that changed since the last call of a ruta script need to be listed here. The value of this parameter needs only be adapted for performance optimization in pipelines that contains several ruta analysis engines. Default value is uima.tcas.Annotation

reindexSkipTypes

This parameter specifies annotation types that should not be reindexed. These types normally include annotations that are added once and are not changed in the following pipeline, e.g., Tokens or TokenSeed (like CW).

reindexOnlyMentionedTypes

If this parameter is activated, then only annotations of types are internally reindexed at beginning that are mentioned with in the rules. This parameter overrides the values of the parameter 'reindexOnly' with the types that are mentioned in the rules. Default value is false.

reindexAdditionally

This parameter specifies annotation types that should be reindexed additionally to types mentioned in the rules. This parameter is only used if the parameter 'reindexOnlyMentionedTypes' is activated.

indexUpdateMode

This parameter specifies the mode for updating the internal indexing in RutaBasic annotations. This is a technical parameter for optimizing the runtime performance/speed of RutaEngines. Available modes are: COMPLETE, ADDITIVE, SAFE_ADDITIVE, NONE. Default value is ADDITIVE.

validateInternalIndexing

Option to validate the internal indexing in RutaBasic with the current CAS after the indexing and reindexing is performed. Annotations that are not correctly indexing in RutaBasics cause Exceptions. Annotations of types listed in parameter 'indexSkipTypes' and 'reindexSkipTypes' are ignored. Default value is false.

emptyIsInvisible

This parameter determines positions as invisible if the internal indexing of the corresponding RutaBasic annotation is empty. Default value is true.

modifyDataPath

This parameter specifies whether the datapath of the ResourceManager is extended by the values of the configuration parameter descriptorPaths.

The default value is set to false.

strictImports

This parameter specifies whether short type names should be resolved against the typesystems declared in the script (true) or at runtime in the CAS typesystem (false). The default value is set to false.

typeIgnorePattern

An optional pattern (regular expression) which defined types that should be ignored. These types will not be resolved even if strictImports is set to false. This parameter can be used to ignore complete namespaces of type that could contain ambiguous short names.

dynamicAnchoring

If this parameter is set to true, then the Ruta rules are not forced to start to match with the first rule element. Rather, the rule element referring to the most rare type is chosen. This option can be utilized to optimize the performance. Please mind that the matching result can vary in some cases when greedy rule elements are applied. The default value is set to false.

lowMemoryProfile

This parameter specifies whether the memory consumption should be reduced. This parameter should be set to true for very large CAS documents (e.g., > 500k tokens), but it also reduces the performance. The default value is set to false.

simpleGreedyForComposed

This parameter specifies whether a different inference strategy for composed rule elements should be applied. This option is only necessary when the composed rule element is expected to match very often, e.g., a rule element like (ANY ANY)+. The default value of this parameter is set to false.

debug

If this parameter is set to true, then additional information about the execution of a rule script is added to the CAS. The actual information is specified by the following parameters. The default value of this parameter is set to false.

debugWithMatches

This parameter specifies whether the match information (covered text) of the rules should be stored in the CAS. The default value of this parameter is set to false.

debugAddToIndexes

This parameter specifies whether all debug annotation should be added to the indexes. By default this parameter is deactivated and only the root script apply is added.

debugOnlyFor

This parameter specifies a list of rule-ids that enumerate the rule for which debug information should be created. No specific ids are given by default.

profile

If this parameter is set to true, then additional information about the runtime of applied rules is added to the CAS. The default value of this parameter is set to false.

statistics

If this parameter is set to true, then additional information about the runtime of UIMA Ruta language elements like conditions and actions is added to the CAS. The default value of this parameter is set to false.

createdBy

If this parameter is set to true, then additional information about what annotation was created by which rule is added to the CAS. The default value of this parameter is set to false.

varNames

This parameter specifies the names of variables and is used in combination with the parameter varValues, which contains the values of the corresponding variables. The n-th entry of this string array specifies the variable of the n-th entry of the string array of the parameter varValues. If the variables is defined in the root of a script, then the name of the variable suffices. If the variable is defined in a BLOCK or imported script, then the the name must contain the namespaces of the blocks as a prefix, e.g., InnerBlock.varName or OtherScript.SomeBlock.varName.

varValues

This parameter specifies the values of variables as string values in an string array.

It is used in combination with the parameter varNames, which contains the names of the corresponding variables.

The n-th entry of this string array specifies the value of the n-th entry of the string array of the parameter varNames.

The values for list variables are separated by the character ,.

Thus, the usage of commas is not allowed if the variable is a list.

dictRemoveWS

If this parameter is set to true, then whitespaces are removed when dictionaries are loaded. The default is set to "true".

csvSeparator

If this parameter is set to any String value then this String/token is used to split columns in CSV tables. The default is set to ';'.

1.5.2. Annotation Writer

This Analysis Engine can be utilized to write the covered text of annotations in a text file, whereas each covered text is put into a new line. If the Analysis engine, for example, is configured for the type “uima.example.Person”, then all covered texts of all Person annotations are stored in a text file, one person in each line. A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

Configuration Parameters

Output

This string parameter specifies the absolute path of the resulting file named “output.txt”. However, if an annotation of the type “org.apache.uima.examples.SourceDocumentInformation” is given, then the value of this parameter is interpreted to be relative to the URI stored in the annotation and the name of the file will be adapted to the name of the source file. If this functionality is activated in the preferences, then the UIMA Ruta Workbench adds the SourceDocumentInformation annotation when the user launches a script file. The default value of this parameter is “/../output/”.

1.5.3. Plain Text Annotator

This Analysis Engines adds annotations for lines and paragraphs. A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project. There are no configuration parameters.

1.5.4. Modifier

The Modifier Analysis Engine can be used to create an additional view, which contains all textual modifications and HTML highlightings that were specified by the executed rules. This Analysis Engine can be applied, e.g., for anonymization where all annotations of persons are replaced by the string “Person”. Furthermore, the content of the new view can optionally be stored in a new HTML file. A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

Configuration Parameters

styleMap

This string parameter specifies the name of the style map file created by the Style Map Creator Analysis Engine, which stores the colors for additional highlightings in the modified view.

descriptorPaths

This parameter can contain multiple string values and specifies the absolute paths where the style map file can be found.

outputLocation

This optional string parameter specifies the absolute path of the resulting file named “output.modified.html”. However, if an annotation of the type “org.apache.uima.examples.SourceDocumentInformation” is given, then the value of this parameter is interpreted to be relative to the URI stored in the annotation and the name of the file will be adapted to the name of the source file. If this functionality is activated in the preferences, then the UIMA Ruta Workbench adds the SourceDocumentInformation annotation when the user launches a script file. The default value of this parameter is empty. In this case no additional html file will be created.

1.5.5. HTML Annotator

This Analysis Engine provides support for HTML files by adding annotations for the HTML elements.

Using the default values, the HTML Annotator creates annotations for each HTML element spanning the content of the element, whereas the most common elements are represented by own types.

The document This text is <b>bold</b>., for example, would be annotated with an annotation of the type “org.apache.uima.ruta.type.html.B” for the word “bold”.

The HTML annotator can be configured in order to include the start and end elements in the created annotations.

A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

1.5.6. HTML Converter

This Analysis Engine is able to convert html content from a source view into a plain string representation stored in an output view.

Especially, the Analysis Engine transfers annotations under consideration of the changed document text and annotation offsets in the new view.

The copy process also sets features, however, features of type annotation are currently not supported.

Note that if an annotation would have the same start and end positions in the new view, i.e., if it would be mapped to an annotation of length 0, it is not moved to the new view.

The HTML Converter also supports heuristic and explicit conversion patterns which default to html4 decoding, e.g., , <, etc.

Concepts like tables or lists are not supported.

Note that in general it is suggested to run an html cleaner before any further processing to avoid problems with malformed html.

A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

Configuration Parameters

outputView

This string parameter specifies the name of the new view. The default value is “plaintext”.

newlineInducingTags

This string array parameter sets the names of the html tags that create linebreaks in the output view. The default is “br, p, div, ul, ol, dl, li, h1, …, h6, blockquote”.

replaceLinebreaks

This boolean parameter determines if linebreaks inside the text nodes are kept or removed. The default behavior is “true”.

replaceLinebreaks

This string parameter determines the character sequence that replaces a linebreak. The default behavior is the empty string.

conversionPolicy

This string parameter determines the conversion policy used, either "heuristic", "explicit", or "none". When the value is "explicit", the parameters “conversionPatterns” and optionally “conversionReplacements” are considered.

The "heuristic" conversion policy uses simple regular expressions to decode html4 entities such as . The default behavior is "heuristic".

conversionPatterns

This string array parameter can be used to apply custom conversions.

It defaults to a list of commonly used codes, e.g., , which are converted using html 4 entity unescaping.

However, explicit conversion strings can also be passed via the parameter “conversionReplacements”.

Remember to enable explicit conversion via “conversionPolicy” first.

conversionReplacements

This string array parameter corresponds to “conversionPatterns” such that “conversionPatterns[i]” will be replaced by “conversionReplacements[i]”; replacements should be shorter than the source pattern. Per default, the replacement strings are computed using Html4 decoding. Remember to enable explicit conversion via “conversionPolicy” first.

skipWhitespaces

This boolean parameter determines if the converter should skip whitespaces. Html documents often contains whitespaces for indentation and formatting, which should not be reproduced in the converted plain text document. If the parameter is set to false, then the whitespaces are not removed. This behavior is useful, if not Html documents are converted, but XMl files. The default value is true.

processAll

If this boolean parameter is set to true, then the tags of the complete document is processed and not only those within the body tag.

newlineInducingTagRegExp

This string parameter contains a regular expression for HTML/XML elements. If the pattern matches, then the element will introduce a new line break similar to the element of the parameter “newlineInducingTags”.

gapInducingTags

This string array parameter sets the names of the html tags that create additional text in the output view. The actual string of the gap is defined by the parameter “gapText”.

1.5.7. Style Map Creator

This Analysis Engine can be utilized to create style map information, which is needed by the Modifier Analysis Engine in order to create highlighting for some annotations. Style map information can be created using the COLOR action. A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

1.5.8. Cutter

This Analysis Engine is able to cut the document of the CAS. Only the text covered by annotations of the specified type will be retained and all other parts of the documents will be removed. The offsets of annotations in the index will be updated, but not feature structures nested as feature values.

1.5.9. View Writer

This Analysis Engine is able to serialize the processed CAS to an XMI file whereas the the source and destination view can be specified A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

Configuration Parameters

output

This string parameter specifies the absolute path of the resulting file named “output.xmi”. However, if an annotation of the type “org.apache.uima.examples.SourceDocumentInformation” is given, then the value of this parameter is interpreted to be relative to the URI stored in the annotation and the name of the file will be adapted to the name of the source file. If this functionality is activated in the preferences, then the UIMA Ruta Workbench adds the SourceDocumentInformation annotation when the user launches a script file.

1.5.10. XMI Writer

This Analysis Engine is able to serialize the processed CAS to an XMI file. One use case for the XMI Writer is, for example, a rule-based sort, which stores the processed XMI files in different folder, dependent on the execution of the rules, e.g., whether a pattern of annotations occurs or not. A descriptor file for this Analysis Engine is located in the folder “descriptor/utils” of a UIMA Ruta project.

Configuration Parameters

Output

This string parameter specifies the absolute path of the resulting file named “output.xmi”. However, if an annotation of the type “org.apache.uima.examples.SourceDocumentInformation” is given, then the value of this parameter is interpreted to be relative to the URI stored in the annotation and the name of the file will be adapted to the name of the source file. If this functionality is activated in the preferences, then the UIMA Ruta Workbench adds the SourceDocumentInformation annotation when the user launches a script file. The default value is “/../output/”

2. Apache UIMA Ruta Language

This chapter provides a complete description of the Apache UIMA Ruta language.

2.1. Syntax

UIMA Ruta defines its own language for writing rules and rule scripts. This section gives a formal overview of its syntax.

Structure: The overall structure of a UIMA Ruta script is defined by the following syntax.

Script -> PackageDeclaration? GlobalStatements Statements

PackageDeclaration -> "PACKAGE" DottedIdentifier ";"

GlobalStatments -> GlobalStatement*

GlobalStatment -> ("SCRIPT" | "ENGINE")

DottedIdentifier2 ";"

| UimafitImport | ImportStatement

UimafitImport -> "UIMAFIT" DottedIdentifier2

("(" DottedIdentifier2

(COMMA DottedIdentifier2)+ ")")?;

Statements -> Statement*

Statement -> Declaration | VariableDeclaration

| BlockDeclaration | SimpleStatement ";"Comments are excluded from the syntax definition. Comments start with "//" and always go to the end of the line.

Syntax of import statements:

ImportStatement -> (ImportType | ImportPackage | ImportTypeSystem) ";"

ImportType -> "IMPORT" Type ("FROM" Typesystem)?

("AS" Alias)?

ImportPackage -> "IMPORT" "PAKAGE" Package ("FROM" Typesystem)?

("AS" Alias)?

ImportTypeSystem -> "IMPORT" "PACKAGE" "*" "FROM" TypeSystem ("AS" Alias)?

| "IMPORT" "*" "FROM" Typesystem

| "TYPESYSTEM" Typesystem

Type -> DottedIdentifier

Package -> DottedIdentifier

TypeSystem -> DottedIdentifier2

Alias -> IdentifierExample beginning of a UIMA Ruta file:

PACKAGE uima.ruta.example;

// import the types of this type system

// (located in the descriptor folder -> types folder)

IMPORT * FROM types.BibtexTypeSystem;

SCRIPT uima.ruta.example.Author;

SCRIPT uima.ruta.example.Title;

SCRIPT uima.ruta.example.Year;Syntax of declarations:

Declaration -> "DECLARE" (AnnotationType)? Identifier ("," Identifier )*

| "DECLARE" AnnotationType? Identifier ( "("

FeatureDeclaration ")" )?

FeatureDeclaration -> ( (AnnotationType | "STRING" | "INT" | "FLOAT"

"DOUBLE" | "BOOLEAN") Identifier) )+

VariableDeclaration -> (("TYPE" Identifier ("," Identifier)*

("=" AnnotationType)?)

| ("STRING" Identifier ("," Identifier)*

("=" StringExpression)?)

| (("INT" | "DOUBLE" | "FLOAT") Identifier

("," Identifier)* ("=" NumberExpression)?)

| ("BOOLEAN" Identifier ("," Identifier)*

("=" BooleanExpression)?)

| ("ANNOTATION" Identifier("="AnnotationExpression)?)

| ("WORDLIST" Identifier ("=" WordListExpression

| StringExpression)?)

| ("WORDTABLE" Identifier ("=" WordTableExpression

| StringExpression)?)

| ("TYPELIST" Identifier ("=" TypeListExpression)?)

| ("STRINGLIST" Identifier

("=" StringListExpression)?)

| (("INTLIST" | "DOUBLELIST" | "FLOATLIST")

Identifier ("=" NumberListExpression)?)

| ("BOOLEANLIST" Identifier

("=" BooleanListExpression)?))

| ("ANNOTATIONLIST" Identifier

("=" AnnotationListExpression)?)

AnnotationType -> BasicAnnotationType | declaredAnnotationType

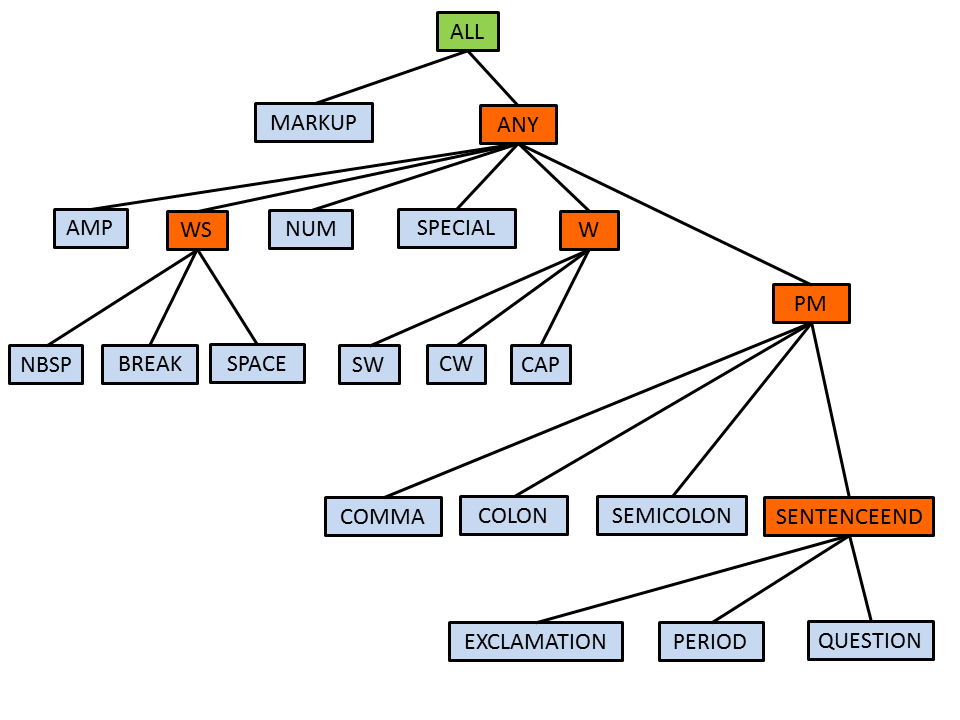

BasicAnnotationType -> ('COLON'| 'SW' | 'MARKUP' | 'PERIOD' | 'CW'| 'NUM'

| 'QUESTION' | 'SPECIAL' | 'CAP' | 'COMMA'

| 'EXCLAMATION' | 'SEMICOLON' | 'NBSP'| 'AMP' | '_'

| 'SENTENCEEND' | 'W' | 'PM' | 'ANY' | 'ALL'

| 'SPACE' | 'BREAK')

BlockDeclaration -> "BLOCK" "(" Identifier ")" RuleElementWithCA

"{" Statements "}"

actionDeclaration -> "ACTION" Identifier "(" ("VAR"? VarType

Identifier)? ("," "VAR"? VarType Identifier)*")"

"=" Action ( "," Action)* ";"

conditionDeclaration-> "CONDITION" Identifier "(" ("VAR"? VarType

Identifier)? ("," "VAR"? VarType Identifier)*")"

"=" Condition ( "," Condition)* ";"Syntax of statements and rule elements:

SimpleStatement -> SimpleRule | RegExpRule | ConjunctRules

| DocumentActionRule

SimpleRule -> RuleElements ";"

RegExpRule -> StringExpression "->" GroupAssignment

("," GroupAssignment)* ";"

ConjunctRules -> RuleElements ("%" RuleElements)+ ";"

DocumentActionRule -> Actions ";"

GroupAssignment -> TypeExpression

| NumberEpxression "=" TypeExpression

RuleElements -> RuleElement+

RuleElement -> (Identifier ":")? "@"?

RuleElementType | RuleElementLiteral

| RuleElementComposed | RuleElementWildCard

| RuleElementOptional

RuleElementType -> AnnotationTypeExpr OptionalRuleElementPart

RuleElementWithCA -> AnnotationTypeExpr ("{" Conditions?

Actions? "}")?

AnnotationTypeExpr -> (TypeExpression | AnnotationExpression

TypeListExpression | AnnotationListExpression)

(Operator)? Expression ("{" Conditions "}")?

FeatureMatchExpression -> TypeExpression ( "." Feature)+

( Operator (Expression | "null"))?

RuleElementLiteral -> SimpleStringExpression OptionalRuleElementPart

RuleElementComposed -> "(" RuleElement ("&" RuleElement)+ ")"

| "(" RuleElement ("|" RuleElement)+ ")"

| "(" RuleElements ")"

OptionalRuleElementPart

OptionalRuleElementPart-> QuantifierPart? ("{" Conditions? Actions? "}")?

InlinedRules?

InlinedRules -> ("<-" "{" SimpleStatement+ "}")*

("->" "{" SimpleStatement+ "}")*

RuleElementWildCard -> "#"("{" Conditions? Actions? }")? InlinedRules?

RuleElementOptional -> "_"("{" Conditions? Actions? }")? InlinedRules?

QuantifierPart -> "*" | "*?" | "+" | "+?" | "?" | "??"

| "[" NumberExpression "," NumberExpression "]"

| "[" NumberExpression "," NumberExpression "]?"

Conditions -> Condition ( "," Condition )*

Actions -> "->" (Identifier ":")? Action

( "," (Identifier ":")? Action)*Since each condition and each action has its own syntax, conditions and actions are described in their own section. For conditions see Section 2.7 , for actions see Section 2.8. The syntax of expressions is explained in Section 2.6.

It is also possible to use specific expression as implicit conditions or action additionally to the set of available conditions and actions.

Condition -> BooleanExpression | FeatureMatchExpression

Action -> TypeExpression | FeatureAssignmentExpression

| VariableAssignmentExpressionIdentifier:

DottedIdentifier -> Identifier ("." Identifier)*

DottedIdentifier2 -> Identifier (("."|"-") Identifier)*

Identifier -> letter (letter|digit)*2.2. Rule elements and their matching order

If not specified otherwise, then the UIMA Ruta rules normally start the matching process with their first rule element. The first rule element searches for possible positions for its matching condition and then will advise the next rule element to continue the matching process. For that reason, writing rules that contain a first rule element with an optional quantifier is discouraged and will result in ignoring the optional attribute of the quantifier.

The starting rule element can also be manually specified by adding “@” directly in front of the matching condition. In the following example, the rule first searches for capitalized words (CW) and then checks whether there is a period in front of the matched word.

PERIOD @CW;This functionality can also be used for rules that start with an optional rule element by manually specifying a later rule element to start the matching process.

The choice of the starting rule element can greatly influence the performance speed of the rule execution. This circumstance is illustrated with the following example that contains two rules, whereas already an annotation of the type “LastToken” was added to the last token of the document:

ANY LastToken;

ANY @LastToken;The first rule matches on each token of the document and checks whether the next annotation is the last token of the document. This will result in many index operations because all tokens of the document are considered. The second rule, however, matches on the last token and then checks if there is any token in front of it. This rule, therefore, considers only one token.

The UIMA Ruta language provides also a concept for automatically selecting the starting rule element called dynamic anchoring. Here, a simple heuristic concerning the position of the rule element and the involved types is applied in order to identify the favorable rule element. This functionality can be activated in the configuration parameters of the analysis engine or directly in the script file with the DYNAMICANCHORING action.

A list of rule elements normally specifies a sequential pattern.

The rule is able to match if the first rule element successfully matches and then the following rule element at the position after the match of the first rule element, and so on.

There are three language constructs that break up that sequential matching: &, “|” and “%”.

A composed rule element where all inner rule elements are linked by the symbol &

matches only if all inner rule elements successfully match at the given position.

A composed rule element with inner rule elements linked by the symbol “|” matches if one of the inner rule element successfully matches.

These composed rule elements therefore specify a conjunction (“and”) and a disjunction (“or”) of its rule element at the given position.

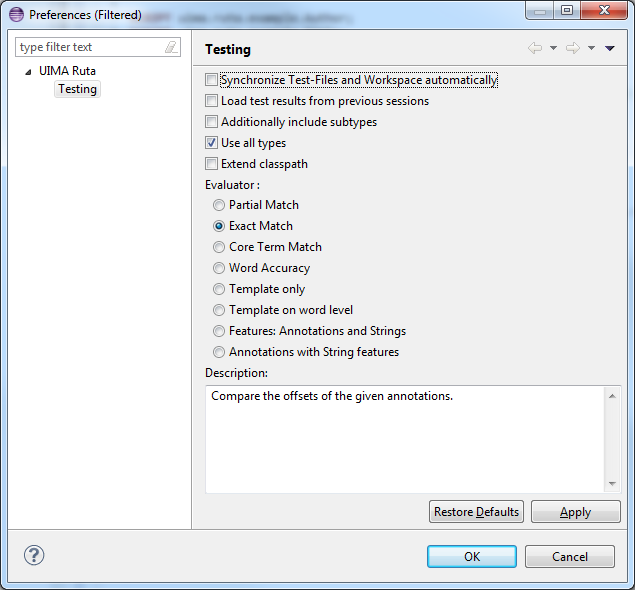

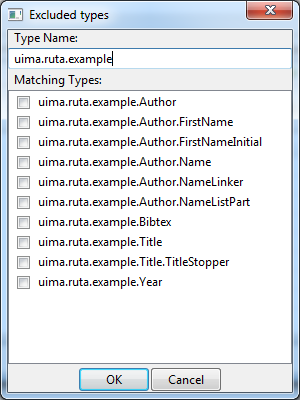

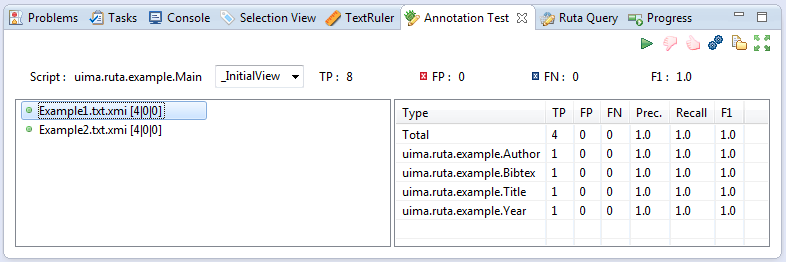

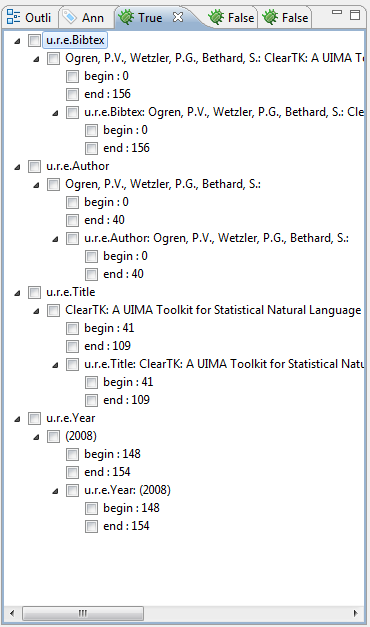

The symbol “%” specifies a different use case.